When building MCP servers, you quickly realize something: the devil really is in the details.

One issue we’ve encountered is how differently LLMs and agent frameworks handle optional and nullable parameters in MCP server tools.

At first glance, this might sound like a subtle implementation detail. But as we learned—through multiple iterations across OpenAI, Anthropic, and others—the way parameters are defined and enforced can make or break compatibility with different model providers, frameworks, and MCP clients.

The Context: MCP Servers and Tool Calls

The Model Context Protocol (MCP) is emerging as a standard for plugging external tools into AI agents. Think of it as a contract: tools expose capabilities, LLMs call them with arguments, and the MCP server executes the tool call.

But the contract only works if everyone agrees on what the arguments look like. In practice, that’s where things can fall apart.

When an agent wants to call an MCP tool, it must provide input arguments. For example, if your tool expects:

    email_address: z.string()
    age: z.number()

…then the LLM connected to your agent has to supply values for email_address and age.

By default, LLMs generate these arguments the same way they generate text: by predicting tokens step by step. In other words, the model will “free-form guess” a JSON object that looks like it matches your schema.
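Because the arguments are generated text, a server cannot assume they are well formed. The following is a minimal, dependency-free sketch of checking a raw argument string against the two-field example above. The names `ExampleArgs` and `parseExampleArgs` are hypothetical and exist only for illustration; a real MCP server would normally let its schema library do this work.

```typescript
// Hypothetical argument shape for the email_address / age example above.
interface ExampleArgs {
  email_address: string;
  age: number;
}

type ParseResult =
  | { ok: true; args: ExampleArgs }
  | { ok: false; errors: string[] };

// Parse the raw text a model produced and check it against the expected shape.
// Returns the typed arguments, or a list of problems to report back to the caller.
function parseExampleArgs(raw: string): ParseResult {
  let value: unknown;
  try {
    value = JSON.parse(raw);
  } catch (err) {
    return { ok: false, errors: ["arguments are not valid JSON"] };
  }
  if (typeof value !== "object" || value === null || Array.isArray(value)) {
    return { ok: false, errors: ["arguments must be a JSON object"] };
  }
  const obj = value as { [key: string]: unknown };
  const email = obj["email_address"];
  const age = obj["age"];
  const errors: string[] = [];
  if (typeof email !== "string") {
    errors.push("email_address must be a string");
  }
  if (typeof age !== "number") {
    errors.push("age must be a number");
  }
  if (typeof email === "string" && typeof age === "number") {
    return { ok: true, args: { email_address: email, age: age } };
  }
  return { ok: false, errors: errors };
}
```

A model that "guesses" `"age": "30"` instead of `"age": 30` produces output that looks plausible but fails this check, which is the kind of mismatch the rest of this post is about.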

Now let’s take a look at a tool in our Skyfire MCP server. Its evolution shows how differences in the way LLMs generate and treat arguments can cause real problems in practice.

The Problem with Optional

Our Skyfire MCP server exposes a tool called create-kya-payment-token. In the original version of the server, it took three parameters: an amount, a seller service ID designating who the payment was for, and a buyer tag that let the buyer agent associate its own tag with a particular token transaction.

Code Snapshot (Initial State):

    this.server.tool(
      'create-kya-payment-token',
      `This tool takes amount and sellerServiceId to create a KYA+PAY token (JWT) for a transaction. It returns generated KYA+PAY token (JWT).
    KYA+PAY token stands for know-your-agent and payment token. Whenever KYA+PAY token is generated it actually deducts money from the linked wallet and can be used to create an account while paying.
    `,
      {
        amount: z.string().describe('dollar value of the token'),
        sellerServiceId: z.string().describe('ID of connected seller'),
        buyerTag: z
          .string()
          .uuid()
          .describe('unique buyer agent identification (uuid)')
      },
      async (params: {
        amount: string
        buyerTag: string
        sellerServiceId: string
      }): Promise<any> => {
        return await createKYAPAYToken(params, this.apiKey)
      }
    )

Turns out, frameworks like Autogen can’t produce UUIDv4 strings reliably. To accommodate them, we made the buyerTag optional.

    this.server.tool(
      'create-kya-payment-token',
      `This tool takes amount and sellerServiceId to create a KYA+PAY token (JWT) for a transaction. It returns generated KYA+PAY token (JWT).
    KYA+PAY token stands for know-your-agent and payment token. Whenever KYA+PAY token is generated it actually deducts money from the linked wallet and can be used to create an account while paying.
    `,
      {
        amount: z.string().describe('dollar value of the token'),
        sellerServiceId: z.string().describe('ID of connected seller'),
        buyerTag: z
          .string()
          .optional()
          .describe('unique buyer agent identification'),
      },
      async (params: {
        amount: string
        sellerServiceId: string
        buyerTag?: string
      }): Promise<any> => {
        return await createKYAPAYToken(params, this.apiKey)
      }
    )

We soon ran into a case where a Vercel AI SDK agent using GPT-4o called this tool and got back an error. Why?

Earlier, we discussed how an agent (like Vercel AI SDK + GPT-4o) calls an MCP tool. The agent’s LLM typically generates inputs that look like they match the tool schema. Different LLM frameworks provide different guardrails to force the model to return JSON that matches the schema.

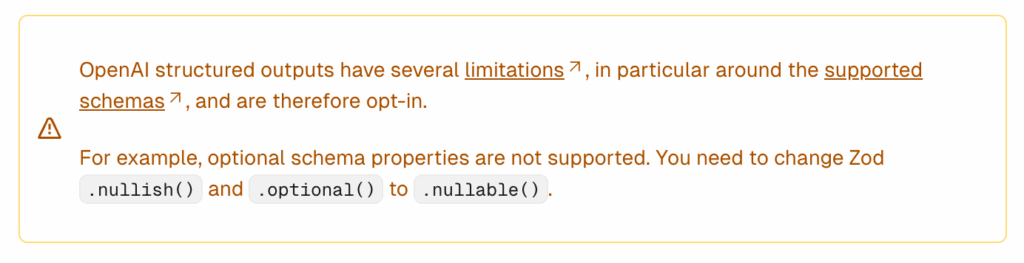

OpenAI provides a feature called structured outputs. If you enable this feature, the LLM is forced to generate a JSON object that matches your Zod schema, with a few key limitations.

If an MCP tool has any optional argument, any agent running with OpenAI structured outputs will fail when calling it, because structured outputs require every property in the schema to be marked as required.
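To make the restriction concrete, here is a small sketch comparing the JSON Schema an optional buyerTag is assumed to produce with the one a nullable buyerTag is assumed to produce. The two schema objects are hand-written approximations rather than the literal output of our server, and `propertiesNotRequired` is a hypothetical helper that checks only one strict-mode rule (every property must be listed in `required`).

```typescript
// Minimal JSON Schema subset, just enough for this illustration.
interface PropertySchema {
  type: string | string[];
  description?: string;
}

interface ObjectSchema {
  type: "object";
  properties: { [key: string]: PropertySchema };
  required: string[];
  additionalProperties: boolean;
}

// Assumed schema when buyerTag is declared with .optional():
// the key is simply left out of "required".
const optionalSchema: ObjectSchema = {
  type: "object",
  properties: {
    amount: { type: "string" },
    sellerServiceId: { type: "string" },
    buyerTag: { type: "string" },
  },
  required: ["amount", "sellerServiceId"],
  additionalProperties: false,
};

// Assumed schema when buyerTag is declared with .nullable():
// the key is required, but null is an allowed value.
const nullableSchema: ObjectSchema = {
  type: "object",
  properties: {
    amount: { type: "string" },
    sellerServiceId: { type: "string" },
    buyerTag: { type: ["string", "null"] },
  },
  required: ["amount", "sellerServiceId", "buyerTag"],
  additionalProperties: false,
};

// Returns the property names that are not listed in "required".
// An empty result means the schema passes this one rule.
function propertiesNotRequired(schema: ObjectSchema): string[] {
  return Object.keys(schema.properties).filter(function (key) {
    return schema.required.indexOf(key) === -1;
  });
}
```

Running the helper over both schemas flags buyerTag in the optional version and nothing in the nullable version, which is why switching from optional to nullable satisfies this particular requirement.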

With the number of agents today that rely on OpenAI models, we could not afford to ignore this restriction. We had to design the tool to stay broadly accessible.

So, we made buyerTag a .nullable() argument instead of .optional(), per the OpenAI requirement.

Now, our tool looked like this:

    this.server.tool(
      'create-kya-payment-token',
      `This tool takes amount and sellerServiceId to create a KYA+PAY token (JWT) for a transaction. It returns generated KYA+PAY token (JWT).
    KYA+PAY token stands for know-your-agent and payment token. Whenever KYA+PAY token is generated it actually deducts money from the linked wallet and can be used to create an account while paying.
    `,
      {
        amount: z.string().describe('dollar value of the token'),
        sellerServiceId: z.string().describe('ID of connected seller'),
        buyerTag: z
          .string()
          .nullable()
          .describe('unique buyer agent identification'),
      },
      async (params: {
        amount: string
        sellerServiceId: string
        buyerTag?: string
      }): Promise<any> => {
        return await createKYAPAYToken(params, this.apiKey)
      }
    )

The Problem with Nullable

With the newfound realization that nullable arguments were more OpenAI agent-friendly, we added some additional nullable input arguments to our create-kya-payment-token tool:

    expiresAt: z.number().nullable()
    identityPermissions: z.array(z.enum(IDENTITY_PERMISSIONS)).nullable()

As soon as these changes were deployed to our MCP server, we saw invalid tool calls left and right. Instead of passing actual null values for the nullable fields, agents using Anthropic LLMs would pass the string “null” into the tool. This broke things immediately for expiresAt, which expects a number, and identityPermissions, which expects an array.
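The difference between JSON null and the string “null” is easy to miss when reading logs, so here is a small sketch of why the calls failed. The two checker functions are hypothetical stand-ins for what the nullable number and nullable array validators accept, and the two payloads are illustrative rather than captured from real traffic.

```typescript
// Stand-in for z.number().nullable(): a number or a real JSON null is accepted.
function isNumberOrNull(value: unknown): boolean {
  return value === null || typeof value === "number";
}

// Stand-in for the outer shape of z.array(...).nullable(): an array or a real JSON null.
function isArrayOrNull(value: unknown): boolean {
  return value === null || Array.isArray(value);
}

// What the schema expects when a field is left empty: JSON null.
const expectedArgs = JSON.parse('{"expiresAt": null, "identityPermissions": null}');

// The failure mode described above: the four-character string "null".
const observedArgs = JSON.parse('{"expiresAt": "null", "identityPermissions": "null"}');

const expectedPasses =
  isNumberOrNull(expectedArgs.expiresAt) &&
  isArrayOrNull(expectedArgs.identityPermissions);

const observedPasses =
  isNumberOrNull(observedArgs.expiresAt) ||
  isArrayOrNull(observedArgs.identityPermissions);
```

Here `expectedPasses` is true and `observedPasses` is false: a string is neither a number, an array, nor null, so both fields are rejected.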

Anthropic was not the only provider that struggled with nullable parameters. Autogen, which had previously been unable to generate valid UUIDv4s, was also unable to make tool calls involving nullable parameters. This was brought to our attention by one of our partners, who reported:

The problem is that Skyfire is using JSON Schema with array types like "type": ["string", "null"] and anyOf constructs, which AutoGen doesn’t handle well.
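One way to catch this class of problem before a partner does is to scan a tool's input schema for the two constructs named in that report. The sketch below is a hypothetical lint helper, not something from our codebase, and the sample schema is a hand-written approximation of what nullable fields are assumed to look like once converted to JSON Schema.

```typescript
// Recursively collects the paths of schema nodes that use a type array or anyOf.
function findUnionConstructs(schema: unknown, path: string): string[] {
  if (typeof schema !== "object" || schema === null) {
    return [];
  }
  let found: string[] = [];
  if (Array.isArray(schema)) {
    for (let i = 0; i < schema.length; i++) {
      found = found.concat(findUnionConstructs(schema[i], path + "[" + i + "]"));
    }
    return found;
  }
  const node = schema as { [key: string]: unknown };
  if (Array.isArray(node["type"])) {
    found.push(path + ".type");
  }
  if (Array.isArray(node["anyOf"])) {
    found.push(path + ".anyOf");
  }
  const keys = Object.keys(node);
  for (let i = 0; i < keys.length; i++) {
    found = found.concat(findUnionConstructs(node[keys[i]], path + "." + keys[i]));
  }
  return found;
}

// Assumed JSON Schema for a tool with two nullable fields (illustrative only).
const sampleInputSchema = {
  type: "object",
  properties: {
    amount: { type: "string" },
    expiresAt: { type: ["number", "null"] },
    identityPermissions: {
      anyOf: [{ type: "array", items: { type: "string" } }, { type: "null" }],
    },
  },
  required: ["amount", "expiresAt", "identityPermissions"],
};

const flaggedPaths = findUnionConstructs(sampleInputSchema, "$");
```

For this sample, `flaggedPaths` contains the locations of the `expiresAt` type array and the `identityPermissions` anyOf. An empty result would mean the schema uses neither construct.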

The Simplest Solution Wins

At this point, we had wised up. If we really wanted any agent – regardless of framework or model limitations – to reliably call our MCP server, we had to stick to required arguments only, and keep them simple enough for an LLM to generate consistently.

This resulted in our current tool call schema:

    this.server.tool(
      'create-kya-payment-token',
      `This tool takes amount and sellerServiceId to create a KYA+PAY token (JWT) for a transaction. It returns generated KYA+PAY token (JWT).
    KYA+PAY token stands for know-your-agent and payment token. Whenever KYA+PAY token is generated it actually deducts money from the linked wallet and can be used to create an account while paying.
    `,
      {
        amount: z.string().describe('dollar value of the token'),
        sellerServiceId: z.string().describe('ID of connected seller')
      },
      async (params: {
        amount: string
        sellerServiceId: string
      }): Promise<any> => {
        return await createKYAPAYToken(params, this.apiKey)
      }
    )

This version removed all optional and nullable arguments and asks only for the information needed to generate a valid token. It is now compatible with all AI models and frameworks, and it is extremely easy to call with only two arguments.

Lessons Learned

Through this journey, we uncovered several key lessons:

Spec != Reality

Just because JSON Schema supports optional and nullable arguments doesn’t mean every LLM and agent framework does. Treat specs as ideals, but test with real LLMs, AI SDKs and MCP clients.

LLMs Prefer Explicitness

OpenAI models especially don’t handle optional arguments well. They want to see required arguments in schemas. Anthropic doesn’t seem to be able to pass null arguments properly and prefers passing non-null arguments.

Frameworks Differ Wildly

What works in OpenAI may break in Anthropic, and what works in both may break in Autogen. Compatibility is not uniform.

Pragmatism Beats Perfection

Sometimes the cleanest solution is to remove optional arguments entirely, especially if they don’t add essential value.

The Takeaway

The world of LLMs, MCP servers, and agent frameworks is still young. The standards are evolving, and every platform, from OpenAI to Autogen to Anthropic to Vercel AI, makes different assumptions about schema enforcement.

The most important principle we’ve learnt is to opt for simplicity and explicitness. If an argument isn’t essential, don’t include it. If it is essential, make it required. Until all models can support the parts of the Zod schema you want to use, it’s best to stick to this approach.

By minimizing optionality, you maximize compatibility and simplicity for agents.

At Skyfire, we’re continuing to test across frameworks and contribute these learnings back to the community. If you’ve faced similar schema or compatibility issues, we’d love to hear from you.

P.S. This was not the end of our MCP troubles. In integrating with AWS Strands, we found that the MCP client tool from their strands-tool library was unable to call tools that had no parameters listed. We had defined one such tool, find-seller, that simply returned all of our registered services for the agent to parse, but the MCP client threw an error when no argument was supplied to the tool call. To fix this, we added a search parameter, which we will use as soon as we build the search index over our services.
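The shape of that workaround can be sketched without any MCP library. Everything below is hypothetical: the `SellerService` record, the sample registry, and the `findSeller` function are placeholders that illustrate a handler accepting a required search argument while still returning every service.

```typescript
// Hypothetical service record; the real data model is not shown in this post.
interface SellerService {
  id: string;
  title: string;
}

// Hypothetical registry contents, for illustration only.
const registeredServices: SellerService[] = [
  { id: "svc-1", title: "Example service A" },
  { id: "svc-2", title: "Example service B" },
];

// One required string argument, so clients always send a non-empty arguments object.
interface FindSellerArgs {
  search: string;
}

// Until a search index exists, the handler accepts `search` but does not filter on it.
function findSeller(args: FindSellerArgs): SellerService[] {
  const ignoredForNow = args.search; // placeholder until search is implemented
  void ignoredForNow;
  return registeredServices.slice();
}
```

Calling `findSeller({ search: "" })` returns the full list, so existing agents keep working while the tool gains a schema that stricter clients can call.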